Content from Why use a Cluster?

Last updated on 2026-02-17

Overview

Questions

- Why would I be interested in High Performance Computing (HPC)?

- What can I expect to learn from this course?

Objectives

- Describe what an HPC system is

- Identify how an HPC system could benefit you.

Frequently, research problems that use computing can outgrow the capabilities of the desktop or laptop computer where they started:

- A statistics student wants to cross-validate a model. This involves running the model 1000 times – but each run takes an hour. Running the model on a laptop will take over a month! In this research problem, final results are calculated after all 1000 models have run, but typically only one model is run at a time (in serial) on the laptop. Since each of the 1000 runs is independent of all others, and given enough computers, it’s theoretically possible to run them all at once (in parallel).

- A genomics researcher has been using small datasets of sequence data, but soon will be receiving a new type of sequencing data that is 10 times as large. It’s already challenging to open the datasets on a computer – analyzing these larger datasets will probably crash it. In this research problem, the calculations required might be impossible to parallelize, but a computer with more memory would be required to analyze the much larger future data set.

- An engineer is using a fluid dynamics package that has an option to run in parallel. So far, the engineer has not used this option on the desktop. In going from 2D to 3D simulations, the simulation time has more than tripled, so it might be useful to take advantage of that option. In this research problem, the calculations in each region of the simulation are largely independent of calculations in other regions of the simulation. It’s possible to run each region’s calculations simultaneously (in parallel), communicate selected results to adjacent regions as needed, and repeat the calculations to converge on a final set of results. In moving from a 2D to a 3D model, both the amount of data and the amount of calculations increase greatly, and it’s theoretically possible to distribute the calculations across multiple computers communicating over a shared network.

In all these cases, access to more (and larger) computers is needed. Those computers should be usable at the same time, solving many researchers’ problems in parallel.
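The arithmetic behind the cross-validation example can be checked directly in the shell. This is a minimal sketch: the run count and hours per run come from the example above, while the worker count of 100 is an assumed figure chosen only for illustration.

```bash
# Back-of-the-envelope timing for the cross-validation example.
runs=1000            # number of independent model runs (from the example)
hours_per_run=1      # each run takes about one hour (from the example)
workers=100          # assumption: 100 runs can execute at the same time

serial_hours=$(( runs * hours_per_run ))
serial_days=$(( serial_hours / 24 ))     # integer division rounds down

# Round the number of batches up: a partly filled batch still takes a full hour.
parallel_hours=$(( (runs + workers - 1) / workers * hours_per_run ))

echo "Serial:   ${serial_hours} hours (more than ${serial_days} days)"
echo "Parallel: ${parallel_hours} hours on ${workers} workers"
```

The parallel figure ignores queueing time and any overhead from collecting results, so treat it as a lower bound.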

Jargon Busting Presentation

Open the HPC Jargon Buster in a new tab. To present the content, press C to open a clone in a separate window, then press P to toggle presentation mode.

I’ve Never Used a Server, Have I?

Take a minute and think about which of your daily interactions with a computer may require a remote server or even cluster to provide you with results.

- Checking email: your computer (possibly in your pocket) contacts a remote machine, authenticates, and downloads a list of new messages; it also uploads changes to message status, such as whether you read, marked as junk, or deleted the message. Since yours is not the only account, the mail server is probably one of many in a data center.

- Searching for a phrase online involves comparing your search term against a massive database of all known sites, looking for matches. This “query” operation can be straightforward, but building that database is a monumental task! Servers are involved at every step.

- Searching for directions on a mapping website involves connecting your (A) starting and (B) end points by traversing a graph in search of the “shortest” path by distance, time, expense, or another metric. Converting a map into the right form is relatively simple, but calculating all the possible routes between A and B is expensive.

Checking email could be serial: your machine connects to one server and exchanges data. Searching by querying the database for your search term (or endpoints) could also be serial, in that one machine receives your query and returns the result. However, assembling and storing the full database is far beyond the capability of any one machine. Therefore, these functions are served in parallel by a large, “hyperscale” collection of servers working together.

- High Performance Computing (HPC) typically involves connecting to very large computing systems elsewhere in the world.

- These other systems can be used to do work that would either be impossible or much slower on smaller systems.

- HPC resources are shared by multiple users.

- The standard method of interacting with such systems is via a command line interface.

Content from Connecting to a remote HPC system

Last updated on 2026-02-17

Overview

Questions

- How do I log in to a remote HPC system?

Objectives

- Configure secure access to a remote HPC system.

- Connect to a remote HPC system.

Secure Connections

The first step in using a cluster is to establish a connection from our laptop to the cluster. When we are sitting at a computer (or standing, or holding it in our hands or on our wrists), we have come to expect a visual display with icons, widgets, and perhaps some windows or applications: a graphical user interface, or GUI. Since computer clusters are remote resources that we connect to over slow or intermittent interfaces (WiFi and VPNs especially), it is more practical to use a command-line interface, or CLI, to send commands as plain text. If a command returns output, it is printed as plain text as well. The commands we run today will not open a window to show graphical results.

If you have ever opened the Windows Command Prompt or macOS Terminal, you have seen a CLI. If you have already taken The Carpentries’ courses on the UNIX Shell or Version Control, you have used the CLI on your local machine extensively. The only leap to be made here is to open a CLI on a remote machine, while taking some precautions so that other folks on the network can’t see (or change) the commands you’re running or the results the remote machine sends back. We will use the Secure SHell protocol (or SSH) to open an encrypted network connection between two machines, allowing you to send & receive text and data without having to worry about prying eyes.

SSH clients are usually command-line tools, where you provide the remote machine address as the only required argument. If your username on the remote system differs from what you use locally, you must provide that as well. If your SSH client has a graphical front-end, such as PuTTY or MobaXterm, you will set these arguments before clicking “connect.” From the terminal, you’ll write something like ssh userName@hostname, where the argument is just like an email address: the “@” symbol is used to separate the personal ID from the address of the remote machine.
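To make the email-address analogy concrete, the sketch below splits a connection target at the @ symbol using shell parameter expansion. The target string is a placeholder, not a real account or host, and nothing is sent over the network.

```bash
# Placeholder target: substitute your own username and cluster address.
target="userName@hostname"

user_part="${target%%@*}"   # everything before the first "@"
host_part="${target#*@}"    # everything after the first "@"

echo "User: ${user_part}"
echo "Host: ${host_part}"
echo "Command to run: ssh ${target}"
```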

When logging in to a laptop, tablet, or other personal device, a username, password, or pattern is normally required to prevent unauthorized access. In these situations, the likelihood of somebody else intercepting your password is low, since logging your keystrokes requires a malicious exploit or physical access. For systems like {{ site.remote.host }} running an SSH server, anybody on the network can log in, or try to. Since usernames are often public or easy to guess, your password is often the weakest link in the security chain. Many clusters therefore forbid password-based login, requiring instead that you generate and configure a public-private key pair with a much stronger password. Even if your cluster does not require it, the next section will guide you through the use of SSH keys and an SSH agent to both strengthen your security and make it more convenient to log in to remote systems.

Better Security With SSH Keys

The Lesson Setup provides instructions for installing a shell application with SSH. If you have not done so already, please open that shell application with a Unix-like command line interface to your system.

SSH keys are an alternative method for authentication to obtain access to remote computing systems. They can also be used for authentication when transferring files or for accessing remote version control systems (such as GitHub). In this section you will create a pair of SSH keys:

- a private key which you keep on your own computer, and

- a public key which can be placed on any remote system you will access.

Private keys are your secure digital passport

A private key that is visible to anyone but you should be considered compromised, and must be destroyed. This includes a key (or a copy of it) stored in a directory with improper permissions, sent across any network that is not secure (encrypted), attached to an unencrypted email, or even displayed in your terminal window.

Protect this key as if it unlocks your front door. In many ways, it does.
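One concrete precaution is to restrict file permissions so that only your account can read the key. The following is a sketch that practices on a throwaway directory (created with mktemp) rather than your real ~/.ssh; the file name and contents are stand-ins, not a real key.

```bash
# Practice directory standing in for a home directory.
demo_home=$(mktemp -d)
mkdir "${demo_home}/.ssh"
echo "not a real key" > "${demo_home}/.ssh/id_ed25519"

# Only the owner may enter the directory or read the private key.
chmod 700 "${demo_home}/.ssh"
chmod 600 "${demo_home}/.ssh/id_ed25519"

# The first ten characters of a long listing show the file type and permissions.
dir_mode=$(ls -ld "${demo_home}/.ssh" | cut -c1-10)
key_mode=$(ls -l "${demo_home}/.ssh/id_ed25519" | cut -c1-10)
echo "directory: ${dir_mode}"
echo "key file:  ${key_mode}"
```

On your own machine, the same chmod commands applied to ~/.ssh and to the private key file have the same effect.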

Regardless of the software or operating system you use, please choose a strong password or passphrase to act as another layer of protection for your private SSH key.

Considerations for SSH Key Passwords

When prompted, enter a strong password that you will remember. There are two common approaches to this:

- Create a memorable passphrase with some punctuation and number-for-letter substitutions, 32 characters or longer. Street addresses work well; just be careful of social engineering or public records attacks.

- Use a password manager and its built-in password generator with all character classes, 25 characters or longer. KeePass and BitWarden are two good options.

Nothing is less secure than a private key with no password. If you skipped password entry by accident, go back and generate a new key pair with a strong password.

SSH Keys on Linux, Mac, MobaXterm, and Windows Subsystem for Linux

Once you have opened a terminal, check for existing SSH keys and their filenames, since an existing key with the same filename would be overwritten.

If ~/.ssh/id_ed25519 already exists, you will need to specify a different name for the new key-pair.
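A sketch of that check, assuming the default file name discussed here. It only looks for the files and never modifies them.

```bash
key_file="${HOME}/.ssh/id_ed25519"

# Test for either half of the key pair before generating a new one.
if [ -e "${key_file}" ] || [ -e "${key_file}.pub" ]; then
    key_status="in use"
    echo "${key_file} already exists: choose a different name for the new key pair."
else
    key_status="available"
    echo "${key_file} is free to use."
fi
```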

Generate a new public-private key pair using the following command, which will produce a stronger key than the ssh-keygen default by invoking these flags:

- -a (default is 16): number of rounds of passphrase derivation; increase to slow down brute force attacks.

- -t (default is rsa in OpenSSH releases before 9.5, ed25519 since): specify the “type” or cryptographic algorithm. ed25519 specifies EdDSA with a 256-bit key; it is faster than RSA with a comparable strength.

- -f (default is /home/user/.ssh/id_algorithm): filename to store your private key. The public key filename will be identical, with a .pub extension added.
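A command consistent with the three flags just described would be ssh-keygen -a 100 -t ed25519 -f ~/.ssh/id_ed25519; the round count of 100 is an assumption, not a value given in this lesson. The sketch below wraps that command in a hypothetical helper function, new_ed25519_key, which refuses to run if the target file already exists.

```bash
# Hypothetical helper (not part of OpenSSH): generate an Ed25519 key pair
# unless the target file already exists.
new_ed25519_key() {
    key_file="${1:-${HOME}/.ssh/id_ed25519}"
    if [ -e "${key_file}" ]; then
        echo "Refusing to overwrite ${key_file}" >&2
        return 1
    fi
    # -a 100: passphrase derivation rounds (assumed value, above the default)
    # -t ed25519: key type; -f: where to store the private key
    ssh-keygen -a 100 -t ed25519 -f "${key_file}"
}
```

Calling new_ed25519_key with no arguments targets ~/.ssh/id_ed25519 and prompts for a passphrase; passing a path selects a different file name.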

When prompted, enter a strong password with the above considerations in mind. Note that the terminal will not appear to change while you type the password: this is deliberate, for your security. You will be prompted to type it again, so don’t worry too much about typos.

Take a look in ~/.ssh (use ls ~/.ssh). You should see two new files:

- your private key (~/.ssh/id_ed25519): do not share with anyone!

- the shareable public key (~/.ssh/id_ed25519.pub): if a system administrator asks for a key, this is the one to send. It is also safe to upload to websites such as GitHub: it is meant to be seen.

Use RSA for Older Systems

If key generation failed because ed25519 is not available, try using the older (but still strong and trustworthy) RSA cryptosystem. Again, first check for an existing key:

If ~/.ssh/id_rsa already exists, you will need to choose a different name for the new key-pair. Generate it as above, with the following extra flags:

- -b sets the number of bits in the key. The default is 2048 in older OpenSSH releases (3072 since version 8.0). EdDSA uses a fixed key length, so this flag would have no effect on an ed25519 key.

- -o (no default): use the OpenSSH key format, rather than PEM.

When prompted, enter a strong password with the above considerations in mind.

Take a look in ~/.ssh (use ls ~/.ssh). You should see two new files:

- your private key (~/.ssh/id_rsa): do not share with anyone!

- the shareable public key (~/.ssh/id_rsa.pub): if a system administrator asks for a key, this is the one to send. It is also safe to upload to websites such as GitHub: it is meant to be seen.

SSH Keys on PuTTY

If you are using PuTTY on Windows, download and use puttygen to generate the key pair. See the PuTTY documentation for details.

- Select EdDSA as the key type.

- Select 255 as the key size or strength.

- Click on the “Generate” button.

- You do not need to enter a comment.

- When prompted, enter a strong password with the above considerations in mind.

- Save the keys in a folder no other users of the system can read.

Take a look in the folder you specified. You should see two new files:

- your private key (id_ed25519): do not share with anyone!

- the shareable public key (id_ed25519.pub): if a system administrator asks for a key, this is the one to send. It is also safe to upload to websites such as GitHub: it is meant to be seen.

SSH Agent for Easier Key Handling

An SSH key is only as strong as the password used to unlock it, but on the other hand, typing out a complex password every time you connect to a machine is tedious and gets old very fast. This is where the SSH Agent comes in.

Using an SSH Agent, you can type your password for the private key once, then have the Agent remember it for some number of hours or until you log off. Unless some nefarious actor has physical access to your machine, this keeps the password safe, and removes the tedium of entering the password multiple times.

Just remember your password, because once it expires in the Agent, you have to type it in again.

SSH Agents on Linux, macOS, and Windows

Open your terminal application and check if an agent is running:

- If you get an error like this one,

ERROR

Error connecting to agent: No such file or directory

… then you need to launch the agent as follows:

What’s in a $(...)?

The syntax of this SSH Agent command is unusual, based on what we’ve seen in the UNIX Shell lesson. This is because the ssh-agent command opens a connection that only you have access to, and prints a series of shell commands that can be used to reach it – but does not execute them!

OUTPUT

SSH_AUTH_SOCK=/tmp/ssh-Zvvga2Y8kQZN/agent.131521; export SSH_AUTH_SOCK;

SSH_AGENT_PID=131522; export SSH_AGENT_PID;

echo Agent pid 131522;

The eval command interprets this text output as commands and allows you to access the SSH Agent connection you just created.

You could run each line of the ssh-agent output yourself, and achieve the same result. Using eval just makes this easier.

- Otherwise, your agent is already running: don’t mess with it.
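The launch command discussed in the callout has the form eval $(ssh-agent). The same mechanism can be tried safely with a stand-in. In this sketch, fake_agent is a hypothetical function that only prints variable assignments, much as ssh-agent does; the variable name and socket path are invented for illustration. Nothing is set until eval runs the printed text.

```bash
# Hypothetical stand-in for ssh-agent: prints shell commands, executes nothing.
fake_agent() {
    echo "DEMO_AUTH_SOCK=/tmp/demo-agent.sock; export DEMO_AUTH_SOCK;"
}

unset DEMO_AUTH_SOCK
fake_agent > /dev/null                 # running it alone changes nothing
before="${DEMO_AUTH_SOCK:-unset}"

eval "$(fake_agent)"                   # eval executes the printed assignments
after="${DEMO_AUTH_SOCK:-unset}"

echo "before eval: ${before}"
echo "after eval:  ${after}"
```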

Add your key to the agent, with session expiration after 8 hours:

OUTPUT

Enter passphrase for .ssh/id_ed25519:

Identity added: .ssh/id_ed25519

Lifetime set to 28800 seconds

For the duration (8 hours), whenever you use that key, the SSH Agent will provide the key on your behalf without you having to type a single keystroke.
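The agent measures a key lifetime in seconds, so 8 hours corresponds to 28800 seconds. The sketch below checks that conversion and prints one form of the command; ssh-add accepts the lifetime through its -t option, either in seconds or with a unit suffix such as 8h. The key path is the default name assumed throughout this section.

```bash
hours=8
lifetime_seconds=$(( hours * 60 * 60 ))
echo "${hours} hours = ${lifetime_seconds} seconds"

# Printed rather than executed, because ssh-add prompts for the key passphrase.
echo "ssh-add -t ${lifetime_seconds} ~/.ssh/id_ed25519"
```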

SSH Agent on PuTTY

If you are using PuTTY on Windows, download and use pageant as the SSH agent. See the PuTTY documentation.

Transfer Your Public Key

{% if site.remote.portal %}

Visit {{ site.remote.portal }} to upload your SSH public key. (Remember, it’s the one ending in .pub!)

{% else %}

Use the secure copy tool to send your public key to the cluster.

BASH

{{ site.local.prompt }} scp ~/.ssh/id_ed25519.pub {{ site.remote.user }}@{{ site.remote.login }}:~/

{% endif %}

Log In to the Cluster

Go ahead and open your terminal or graphical SSH client, then log in to the cluster. Replace {{ site.remote.user }} with your username or the one supplied by the instructors.

You may be asked for your password. Watch out: the characters you type after the password prompt are not displayed on the screen. Normal output will resume once you press Enter.

You may have noticed that the prompt changed when you logged into the remote system using the terminal (if you logged in using PuTTY this will not apply because it does not offer a local terminal). This change is important because it helps you tell which system will run the commands you type. It is also a small complication that we will need to navigate throughout the workshop. Exactly what is displayed as the prompt (which conventionally ends in $) when the terminal is connected to the local system or the remote system will typically be different for every user. We still need to indicate which system we are entering commands on, though, so we will adopt the following convention:

- {{ site.local.prompt }} when the command is to be entered on a terminal connected to your local computer

- {{ site.remote.prompt }} when the command is to be entered on a terminal connected to the remote system

- $ when it really doesn’t matter which system the terminal is connected to.

Looking Around Your Remote Home

Many users are tempted to think of a high-performance computing installation as one giant, magical machine. Sometimes, people will assume that the computer they’ve logged onto is the entire computing cluster. So what’s really happening? What computer have we logged on to? The name of the current computer we are logged onto can be checked with the hostname command. (You may also notice that the current hostname is also part of our prompt!)

OUTPUT

{{ site.remote.host }}

So, we’re definitely on the remote machine. Next, let’s find out where we are by running pwd to print the working directory.

OUTPUT

{{ site.remote.homedir }}/{{ site.remote.user }}

Great, we know where we are! Let’s see what’s in our current directory:

OUTPUT

id_ed25519.pub

The system administrators may have configured your home directory with some helpful files, folders, and links (shortcuts) to space reserved for you on other filesystems. If they did not, your home directory may appear empty. To double-check, include hidden files in your directory listing:

OUTPUT

. .bashrc id_ed25519.pub

.. .ssh

In the first column, . is a reference to the current directory and .. a reference to its parent ({{ site.remote.homedir }}). You may or may not see the other files, or files like them: .bashrc is a shell configuration file, which you can edit with your preferences; and .ssh is a directory storing SSH keys and a record of authorized connections.
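The listings in this section come from a short sequence of commands: hostname, pwd, ls, and a listing that includes hidden files (ls -a is the usual choice and is assumed here). As a sketch, the sequence works the same way on any Unix-like system; the variable names are only there to label the results.

```bash
# Which machine is this? Fall back to uname -n where hostname is not installed.
current_host=$(hostname 2>/dev/null || uname -n)
echo "host: ${current_host}"

# Where are we in the filesystem?
current_dir=$(pwd)
echo "working directory: ${current_dir}"

# What is here, including hidden entries such as . and .. ?
listing=$(ls -a)
echo "${listing}"
```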

{% unless site.remote.portal %}

Install Your SSH Key

There May Be a Better Way

Policies and practices for handling SSH keys vary between HPC clusters: follow any guidance provided by the cluster administrators or documentation. In particular, if there is an online portal for managing SSH keys, use that instead of the directions outlined here.

If you transferred your SSH public key with scp, you should see id_ed25519.pub in your home directory. To “install” this key, it must be listed in a file named authorized_keys under the .ssh folder.

If the .ssh folder was not listed above, then it does not yet exist: create it.

Now, use cat to print your public key, but redirect the output, appending it to the authorized_keys file:

That’s all! Disconnect, then try to log back into the remote: if your key and agent have been configured correctly, you should not be prompted for the password for your SSH key.
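The installation steps can be rehearsed without touching a real account. The sketch below uses a temporary directory as a stand-in for the remote home directory, and the key text is a made-up placeholder; on the cluster you would run the mkdir and cat steps against ~ and your actual id_ed25519.pub. The chmod lines are an addition to the steps described above, reflecting the common OpenSSH requirement that these files not be writable by other users.

```bash
# Stand-in for the remote home directory, holding a placeholder public key.
remote_home=$(mktemp -d)
echo "ssh-ed25519 AAAAexamplePLACEHOLDERkey user@laptop" > "${remote_home}/id_ed25519.pub"

# Create .ssh if it is missing (-p makes this safe to repeat).
mkdir -p "${remote_home}/.ssh"
chmod 700 "${remote_home}/.ssh"

# Append (>>) rather than overwrite (>), so existing authorized keys survive.
cat "${remote_home}/id_ed25519.pub" >> "${remote_home}/.ssh/authorized_keys"
chmod 600 "${remote_home}/.ssh/authorized_keys"

key_count=$(grep -c '^ssh-ed25519 ' "${remote_home}/.ssh/authorized_keys")
echo "authorized_keys now lists ${key_count} key(s)"
```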

{% endunless %}

- An HPC system is a set of networked machines.

- HPC systems typically provide login nodes and a set of worker nodes.

- The resources found on independent (worker) nodes can vary in volume and type (amount of RAM, processor architecture, availability of network mounted filesystems, etc.).

- Files saved on shared storage from one node are available on all nodes.

Content from Exploring Remote Resources

Last updated on 2026-02-17

Overview

Questions

- How does my local computer compare to the remote systems?

- How does the login node compare to the compute nodes?

- Are all compute nodes alike?

Objectives

- Survey system resources using nproc, free, and the queuing system

- Compare & contrast resources on the local machine, login node, and worker nodes

- Learn about the various filesystems on the cluster using df

- Find out who else is logged in

- Assess the number of idle and occupied nodes

Look Around the Remote System

If you have not already connected to {{ site.remote.name }}, please do so now:

Take a look at your home directory on the remote system:

What’s different between your machine and the remote?

Open a second terminal window on your local computer and run the ls command (without logging in to {{ site.remote.name }}).

What differences do you see?

Most high-performance computing systems run the Linux operating system, which is built around the UNIX Filesystem Hierarchy Standard. Instead of having a separate root for each hard drive or storage medium, all files and devices are anchored to the “root” directory, which is /:

OUTPUT

bin etc lib64 proc sbin sys var

boot {{ site.remote.homedir | replace: "/", "" }} mnt root scratch tmp working

dev lib opt run srv usr

The “{{ site.remote.homedir | replace: "/", "" }}” directory is the one where we generally want to keep all of our files. Other folders on a UNIX OS contain system files and change as you install new software or upgrade your OS.

Using HPC filesystems

On HPC systems, you have a number of places where you can store your files. These differ in both the amount of space allocated and whether or not they are backed up.

- Home – often a network filesystem, data stored here is available throughout the HPC system, and often backed up periodically. Files stored here are typically slower to access because the data is actually stored on another computer and is transmitted and made available over the network!

- Scratch – typically faster than the networked Home directory, but not usually backed up, and should not be used for long term storage.

- Work – sometimes provided as an alternative to Scratch space, Work is a fast file system accessed over the network. Typically, this will have higher performance than your home directory, but lower performance than Scratch; it may not be backed up. It differs from Scratch space in that files in a work file system are not automatically deleted for you: you must manage the space yourself.

Nodes

Individual computers that compose a cluster are typically called nodes (although you will also hear people call them servers, computers and machines). On a cluster, there are different types of nodes for different types of tasks. The node where you are right now is called the login node, head node, landing pad, or submit node. A login node serves as an access point to the cluster.

As a gateway, the login node should not be used for time-consuming or resource-intensive tasks. You should be alert to this, and check with your site’s operators or documentation for details of what is and isn’t allowed. The login node is well suited for uploading and downloading files, setting up software, and running tests. Generally speaking, in these lessons, we will avoid running jobs on the login node.

Who else is logged in to the login node?

This may show only your user ID, but there are likely several other people (including fellow learners) connected right now.
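One way to answer this question is the who command, which lists current login sessions on most Unix-like systems. As a sketch, the pipeline below reduces that list to a count of distinct user names. On a personal machine or inside a container the count may be zero or one.

```bash
# who prints one line per login session; the first field is the user name.
user_count=$(who | awk '{ print $1 }' | sort -u | wc -l | tr -d ' ')
echo "Distinct users logged in: ${user_count}"
```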

Dedicated Transfer Nodes

If you want to transfer larger amounts of data to or from the cluster, some systems offer dedicated nodes for data transfers only. The motivation for this lies in the fact that larger data transfers should not obstruct operation of the login node for anybody else. Check with your cluster’s documentation or its support team if such a transfer node is available. As a rule of thumb, consider all transfers of a volume larger than 500 MB to 1 GB as large. These numbers vary, however, depending on factors such as your own network connection and that of your cluster.

The real work on a cluster gets done by the compute (or worker) nodes. Compute nodes come in many shapes and sizes, but generally are dedicated to long or hard tasks that require a lot of computational resources.

All interaction with the compute nodes is handled by a specialized piece of software called a scheduler (the scheduler used in this lesson is called {{ site.sched.name }}). We’ll learn more about how to use the scheduler to submit jobs next, but for now, it can also tell us more information about the compute nodes.

For example, we can view all of the compute nodes by running the command {{ site.sched.info }}.

OUTPUT

PARTITION AVAIL TIMELIMIT NODES STATE NODELIST

cpubase_bycore_b1* up infinite 4 idle node[1-2],smnode[1-2]

node up infinite 2 idle node[1-2]

smnode up infinite 2 idle smnode[1-2]

On a busy cluster, a lot of the nodes will be running work for other users: we are not alone here!
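To assess how many nodes are idle, the NODES column can be summed per state. The sketch below works on a copy of the sample listing above rather than a live scheduler, so it assumes the same column layout. Because the same nodes appear in several partitions, it counts only the default partition, which the listing marks with an asterisk.

```bash
# Copy of the sample listing, standing in for live scheduler output.
sample_output='PARTITION          AVAIL  TIMELIMIT  NODES  STATE  NODELIST
cpubase_bycore_b1* up     infinite   4      idle   node[1-2],smnode[1-2]
node               up     infinite   2      idle   node[1-2]
smnode             up     infinite   2      idle   smnode[1-2]'

# Column 1 is the partition, column 4 the node count, column 5 the state.
idle_nodes=$(echo "${sample_output}" |
    awk '$1 ~ /\*$/ && $5 == "idle" { total += $4 } END { print total + 0 }')

echo "Idle nodes in the default partition: ${idle_nodes}"
```

Against a live system, the same awk filter could read the scheduler command output through a pipe instead of the sample text, provided the columns match.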

There are also specialized machines used for managing disk storage, user authentication, and other infrastructure-related tasks. Although we do not typically log on to or interact with these machines directly, they enable a number of key features like ensuring our user account and files are available throughout the HPC system.

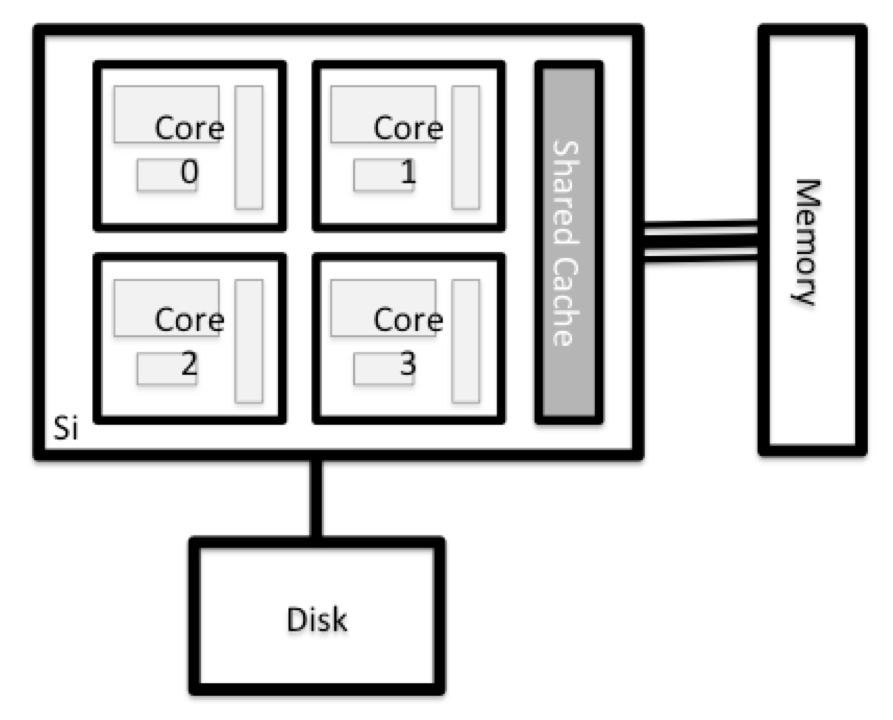

What’s in a Node?

All of the nodes in an HPC system have the same components as your own laptop or desktop: CPUs (sometimes also called processors or cores), memory (or RAM), and disk space. CPUs are a computer’s tool for actually running programs and calculations. Information about a current task is stored in the computer’s memory. Disk refers to all storage that can be accessed like a file system. This is generally storage that can hold data permanently, i.e. data is still there even if the computer has been restarted. While this storage can be local (a hard drive installed inside the node), it is more common for nodes to connect to a shared, remote fileserver or cluster of servers.

There are several ways to find out how many CPUs and how much memory a machine has. Most operating systems have a graphical system monitor, like the Windows Task Manager. More detailed information can be found on the command line:

- Run system utilities

- Read from /proc

- Run system monitor
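As a sketch of the first two approaches on a Linux machine: nproc reports the processor count, and /proc/meminfo holds memory figures in units of 1024 bytes. Both are Linux-specific, so on macOS or Windows the graphical system monitor is the easier route.

```bash
# Approach 1: a system utility reports the number of processors.
cpu_count=$(nproc --all)
echo "Processors: ${cpu_count}"

# Approach 2: read the kernel report from /proc and convert it to MiB.
mem_total_mib=$(awk '/^MemTotal:/ { print int($2 / 1024) }' /proc/meminfo)
echo "Total memory: ${mem_total_mib} MiB"
```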

Explore the Login Node

Now compare the resources of your computer with those of the login node.

BASH

{{ site.local.prompt }} ssh {{ site.remote.user }}@{{ site.remote.login }}

{{ site.remote.prompt }} nproc --all

{{ site.remote.prompt }} free -m

You can get more information about the processors using lscpu, and a lot of detail about the memory by reading the file /proc/meminfo:

You can also explore the available filesystems using df to show disk free space. The -h flag renders the sizes in a human-friendly format, i.e., GB instead of B. The type flag -T shows what kind of filesystem each resource is.
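A sketch of the df usage just described, followed by a short summary of which filesystem types are mounted. The -T flag is available in the GNU and BusyBox versions of df found on Linux; the awk step assumes the type is in the second column, as it is in that output.

```bash
# Human-readable sizes (-h) plus a column naming each filesystem type (-T).
df -Th

# Reduce the report to the distinct filesystem types, skipping the header.
# -P keeps each filesystem on a single line, which makes the columns reliable.
fs_types=$(df -PT | awk 'NR > 1 { print $2 }' | sort -u)
echo "Filesystem types seen:"
echo "${fs_types}"
```

Running this on your laptop, the login node, and later a compute node makes the differences between local and networked filesystems easy to spot.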

Different results from df

- The local filesystems (ext, tmp, xfs, zfs) will depend on whether you’re on the same login node (or compute node, later on).

- Networked filesystems (beegfs, cifs, gpfs, nfs, pvfs) will be similar -- but may include {{ site.remote.user }}, depending on how it is mounted.

Compare Your Computer, the Login Node and the Compute Node

Compare your laptop’s number of processors and memory with the numbers you see on the cluster login node and compute node. What implications do you think the differences might have on running your research work on the different systems and nodes?

Compute nodes are usually built with processors that have higher core-counts than the login node or personal computers in order to support highly parallel tasks. Compute nodes usually also have substantially more memory (RAM) installed than a personal computer. More cores tend to help jobs that depend on some work that is easy to perform in parallel, and more, faster memory is key for large or complex numerical tasks.

Differences Between Nodes

Many HPC clusters have a variety of nodes optimized for particular workloads. Some nodes may have a larger amount of memory, or specialized resources such as Graphics Processing Units (GPUs or “video cards”).

With all of this in mind, we will now cover how to talk to the cluster’s scheduler, and use it to start running our scripts and programs!

- An HPC system is a set of networked machines.

- HPC systems typically provide login nodes and a set of compute nodes.

- The resources found on independent (worker) nodes can vary in volume and type (amount of RAM, processor architecture, availability of network mounted filesystems, etc.).

- Files saved on shared storage are available on all nodes.

- The login node is a shared machine: be considerate of other users.

Content from EPCC version - Working on a remote HPC system

Last updated on 2026-02-17

Overview

Questions

- What is an HPC system?

- How does an HPC system work?

- How do I log on to a remote HPC system?

Objectives

- Connect to a remote HPC system.

- Understand the general HPC system architecture.

What Is an HPC System?

The words “cloud”, “cluster”, and the phrase “high-performance computing” or “HPC” are used a lot in different contexts and with various related meanings. So what do they mean? And more importantly, how do we use them in our work?

The cloud is a generic term commonly used to refer to computing resources that are a) provisioned to users on demand or as needed and b) represent real or virtual resources that may be located anywhere on Earth. For example, a large company with computing resources in Brazil, Zimbabwe and Japan may manage those resources as its own internal cloud and that same company may also utilize commercial cloud resources provided by Amazon or Google. Cloud resources may refer to machines performing relatively simple tasks such as serving websites, providing shared storage, providing web services (such as e-mail or social media platforms), as well as more traditional compute intensive tasks such as running a simulation.

The term HPC system, on the other hand, describes a stand-alone resource for computationally intensive workloads. Such systems typically comprise a multitude of integrated processing and storage elements, designed to handle high volumes of data and/or large numbers of floating-point operations per second (FLOPS) with the highest possible performance. For example, all of the machines on the Top-500 list are HPC systems. To support these constraints, an HPC resource must exist in a specific, fixed location: networking cables can only stretch so far, and electrical and optical signals can travel only so fast.

The word “cluster” is often used for small to moderate scale HPC resources less impressive than the Top-500. Clusters are often maintained in computing centers that support several such systems, all sharing common networking and storage to support common compute intensive tasks.

Logging In

The first step in using a cluster is to establish a connection from our laptop to the cluster. When we are sitting at a computer (or standing, or holding it in our hands or on our wrists), we have come to expect a visual display with icons, widgets, and perhaps some windows or applications: a graphical user interface, or GUI. Since computer clusters are remote resources that we often connect to over slow or laggy interfaces (WiFi and VPNs especially), it is more practical to use a command-line interface, or CLI, in which commands and results are transmitted via text only. Anything other than text (images, for example) must be written to disk and opened with a separate program.

If you have ever opened the Windows Command Prompt or macOS Terminal, you have seen a CLI. If you have already taken The Carpentries’ courses on the UNIX Shell or Version Control, you have used the CLI on your local machine somewhat extensively. The only leap to be made here is to open a CLI on a remote machine, while taking some precautions so that other folks on the network can’t see (or change) the commands you’re running or the results the remote machine sends back. We will use the Secure SHell protocol (or SSH) to open an encrypted network connection between two machines, allowing you to send & receive text and data without having to worry about prying eyes.

Make sure you have an SSH client installed on your laptop. Refer to the setup section for more details. SSH clients are usually command-line tools, where you provide the remote machine address as the only required argument. If your username on the remote system differs from what you use locally, you must provide that as well. If your SSH client has a graphical front-end, such as PuTTY or MobaXterm, you will set these arguments before clicking “connect.” From the terminal, you’ll write something like ssh userName@hostname, where the “@” symbol is used to separate the two parts of a single argument.

Go ahead and open your terminal or graphical SSH client, then log in to the cluster using your username and the address of the remote computer you can reach from the outside world, hosted at EPCC, The University of Edinburgh.

Remember to replace userid with your username or the one supplied by the instructors. You may be asked for your password. Watch out: the characters you type after the password prompt are not displayed on the screen. Normal output will resume once you press Enter.
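As a minimal sketch of how the single user@host argument is put together, the snippet below builds the login command and prints it rather than running it. The username userid is a placeholder, and the host name is the one used in the login example later in this lesson; both are assumptions to replace with the values your instructors give you.

```shell
# Placeholders (assumptions): substitute your own username and login address.
remote_user="userid"
remote_host="login.archer2.ac.uk"

# ssh takes one argument of the form user@host.
ssh_target="${remote_user}@${remote_host}"

# Print the command instead of running it, so this is safe to try anywhere.
echo "ssh ${ssh_target}"
```

Copying the printed line into your terminal starts the actual login, at which point you may be prompted for your password.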

Where Are We?

Very often, many users are tempted to think of a high-performance computing installation as one giant, magical machine. Sometimes, people will assume that the computer they’ve logged onto is the entire computing cluster. So what’s really happening? What computer have we logged on to? The name of the current computer we are logged onto can be checked with the hostname command. (You may also notice that the current hostname is also part of our prompt!)
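A short sketch of that check follows. The fallback to uname -n is an addition for machines where the hostname command is not installed; it is not part of the lesson itself.

```shell
# Ask the machine for its own name; fall back to uname -n if hostname is missing.
current_host="$(hostname 2>/dev/null || uname -n)"
echo "Currently logged in on: ${current_host}"
```

Run once on your laptop and once after logging in to the cluster, this should report two different names.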

What’s in Your Home Directory?

The system administrators may have configured your home directory with some helpful files, folders, and links (shortcuts) to space reserved for you on other filesystems. Take a look around and see what you can find. Hint: The shell commands pwd and ls may come in handy. Home directory contents vary from user to user. Please discuss any differences you spot with your neighbors.

The deepest layer should differ: userid is uniquely yours. Are there differences in the path at higher levels?

If both of you have empty directories, they will look identical. If you or your neighbor has used the system before, there may be differences. What are you working on?

Use pwd to print the working directory path.

You can run ls to list the directory contents, though it’s possible nothing will show up (if no files have been provided). To be sure, use the -a flag to show hidden files, too.

At a minimum, this will show the current directory as “.” and the parent directory as “..”.
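The commands just described can be tried together as in the sketch below. The final count of dot-entries is only an illustration added here to show that the two special entries are always listed.

```shell
# Print the path of the directory we are currently in.
pwd

# List everything, including hidden entries whose names start with a dot.
ls -a

# Count the entries that start with a dot; "." and ".." are always among them.
hidden_count="$(ls -a | grep -c '^\.')"
echo "Entries starting with a dot: ${hidden_count}"
```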

Nodes

Individual computers that compose a cluster are typically called nodes (although you will also hear people call them servers, computers and machines). On a cluster, there are different types of nodes for different types of tasks. The node where you are right now is called the head node, login node, landing pad, or submit node. A login node serves as an access point to the cluster.

As a gateway, it is well suited for uploading and downloading files, setting up software, and running quick tests. Generally speaking, the login node should not be used for time-consuming or resource-intensive tasks. You should be alert to this, and check with your site’s operators or documentation for details of what is and isn’t allowed. In these lessons, we will avoid running jobs on the head node.

Dedicated Transfer Nodes

If you want to transfer larger amounts of data to or from the cluster, some systems offer dedicated nodes for data transfers only. The motivation is that large data transfers should not obstruct operation of the login node for anybody else. Check your cluster’s documentation or ask its support team whether such a transfer node is available. As a rule of thumb, consider any transfer larger than 500 MB to 1 GB as large. These thresholds are not fixed: they depend, for example, on your own network connection and that of your cluster.

The real work on a cluster gets done by the worker (or compute) nodes. Worker nodes come in many shapes and sizes, but generally are dedicated to long or hard tasks that require a lot of computational resources.

All interaction with the worker nodes is handled by a specialized piece of software called a scheduler (the scheduler used in this lesson is called Slurm). We’ll learn more about how to use the scheduler to submit jobs next, but for now, it can also tell us more information about the worker nodes.

For example, we can view all of the worker nodes by running the command sinfo.

OUTPUT

PARTITION AVAIL TIMELIMIT NODES STATE NODELIST

standard up 1-00:00:00 27 drain* nid[001029,001050,001149,001363,001366,001391,001552,001568,001620,001642,001669,001672-001675,001688,001690-001691,001747,001751,001783,001793,001812,001832-001835]

standard up 1-00:00:00 5 down* nid[001024,001026,001064,001239,001898]

standard up 1-00:00:00 8 drain nid[001002,001028,001030-001031,001360-001362,001745]

standard up 1-00:00:00 945 alloc nid[001000-001001,001003-001023,001025,001027,001032-001037,001040-001049,001051-001063,001065-001108,001110-001145,001147,001150-001238,001240-001264,001266-001271,001274-001334,001337-001359,001364-001365,001367-001390,001392-001551,001553-001567,001569-001619,001621-001637,001639-001641,001643-001668,001670-001671,001676,001679-001687,001692-001734,001736-001744,001746,001748-001750,001752-001782,001784-001792,001794-001811,001813-001824,001826-001831,001836-001890,001892-001897,001899-001918,001920,001923-001934,001936-001945,001947-001965,001967-001981,001984-001991,002006-002023]

standard up 1-00:00:00 37 resv nid[001038-001039,001109,001146,001148,001265,001272-001273,001335-001336,001638,001677-001678,001735,001891,001919,001921-001922,001935,001946,001966,001982-001983,001992-002005]

There are also specialized machines used for managing disk storage, user authentication, and other infrastructure-related tasks. Although we do not typically log on to or interact with these machines directly, they enable a number of key features, such as ensuring our user account and files are available throughout the HPC system.
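Long node lists like the sinfo listing above are hard to read at a glance. As a sketch, the helper below (summarize_states is a hypothetical name, not a Slurm command) sums the NODES column for each STATE. It assumes the default six-column layout shown above, and the sample rows are copied from that listing with the node lists shortened to "...".

```shell
# Sum the NODES column (field 4) for each STATE (field 5), skipping the header.
summarize_states() {
  awk 'NR > 1 && NF >= 5 { count[$5] += $4 }
       END { for (state in count) print state, count[state] }' | sort
}

# Sample rows taken from the listing above; node lists replaced by "...".
sample='PARTITION AVAIL TIMELIMIT NODES STATE NODELIST
standard up 1-00:00:00 27 drain* ...
standard up 1-00:00:00 5 down* ...
standard up 1-00:00:00 8 drain ...
standard up 1-00:00:00 945 alloc ...
standard up 1-00:00:00 37 resv ...'

printf '%s\n' "$sample" | summarize_states
```

On a cluster, the same helper could be fed live data with sinfo | summarize_states.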

What's in a Node?

All of the nodes in an HPC system have the same components as your own laptop or desktop: CPUs (sometimes also called processors or cores), memory (or RAM), and disk space. CPUs are a computer’s tool for actually running programs and calculations. Information about a current task is stored in the computer’s memory. Disk refers to all storage that can be accessed like a file system. This is generally storage that can hold data permanently, i.e. data is still there even if the computer has been restarted. While this storage can be local (a hard drive installed inside the node), it is more common for nodes to connect to a shared, remote fileserver or cluster of servers.

There are several ways to find out how many CPUs and how much memory a computer has. Most operating systems have a graphical system monitor, like the Windows Task Manager. More detailed information can sometimes be found on the command line. For example, some of the commands used on a Linux system are:

Run system utilities

Read from /proc

Run system monitor
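A minimal sketch of the first two approaches is shown below, assuming a Linux machine. The getconf fallback is an addition for systems without nproc, and each step is guarded so the snippet skips whatever is not available.

```shell
# System utility: count the processing units the operating system reports.
if command -v nproc >/dev/null 2>&1; then
  cpu_count="$(nproc --all)"
else
  cpu_count="$(getconf _NPROCESSORS_ONLN)"   # fallback, e.g. on macOS
fi
echo "CPUs: ${cpu_count}"

# System utility: memory in megabytes (free is Linux-specific).
if command -v free >/dev/null 2>&1; then
  free -m
fi

# Read from /proc: the kernel exposes total memory in /proc/meminfo on Linux.
if [ -r /proc/meminfo ]; then
  grep MemTotal /proc/meminfo
fi
```

For the third approach, an interactive monitor such as top can be started from the same terminal and closed again with q.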

Explore the login node

Now compare the resources of your computer with those of the head node.

BASH

[user@laptop ~]$ ssh userid@login.archer2.ac.uk

userid@ln03:~> nproc --all

userid@ln03:~> free -m

You can get more information about the processors using lscpu, and a lot of detail about the memory by reading the file /proc/meminfo.

You can also explore the available filesystems using df to show disk free space. The -h flag renders the sizes in a human-friendly format, i.e., GB instead of B. The type flag -T shows what kind of filesystem each resource is.
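The three commands mentioned here can be combined as in the following sketch. It assumes a Linux system; the head calls only shorten the output, and the fallback to plain df -h is an addition for df versions that lack -T.

```shell
# Processor details (first 20 lines only, to keep the output short).
if command -v lscpu >/dev/null 2>&1; then
  lscpu | head -n 20
fi

# Memory details straight from the kernel (first 5 lines only).
if [ -r /proc/meminfo ]; then
  head -n 5 /proc/meminfo
fi

# Filesystems with human-readable sizes (-h) and filesystem type (-T).
fs_report="$(df -Th 2>/dev/null || df -h)"
printf '%s\n' "$fs_report"
```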

The local filesystems (ext, tmp, xfs, zfs) will depend on whether you’re on the same login node (or compute node, later on). Networked filesystems (beegfs, cifs, gpfs, nfs, pvfs) will be similar — but may include userid, depending on how it is mounted.

Compare Your Computer, the login node and the compute node

Compare your laptop’s number of processors and memory with the numbers you see on the cluster head node and worker node. Discuss the differences with your neighbor.

What implications do you think the differences might have on running your research work on the different systems and nodes?

Differences Between Nodes

Many HPC clusters have a variety of nodes optimized for particular workloads. Some nodes may have larger amounts of memory, or specialized resources such as Graphics Processing Units (GPUs).

With all of this in mind, we will now cover how to talk to the cluster’s scheduler, and use it to start running our scripts and programs!

- An HPC system is a set of networked machines.

- HPC systems typically provide login nodes and a set of worker nodes.

- The resources found on independent (worker) nodes can vary in volume and type (amount of RAM, processor architecture, availability of network mounted filesystems, etc.).

- Files saved on one node are available on all nodes.

Content from Scheduler Fundamentals

Last updated on 2026-02-17

Overview

Questions

- What is a scheduler and why does a cluster need one?

- How do I launch a program to run on a compute node in the cluster?

- How do I capture the output of a program that is run on a node in the cluster?

Objectives

- Submit a simple script to the cluster.

- Monitor the execution of jobs using command line tools.

- Inspect the output and error files of your jobs.

- Find the right place to put large datasets on the cluster.

Job Scheduler

An HPC system might have thousands of nodes and thousands of users. How do we decide who gets what and when? How do we ensure that a task is run with the resources it needs? This job is handled by a special piece of software called the scheduler. On an HPC system, the scheduler manages which jobs run where and when.

The following illustration compares these tasks of a job scheduler to a waiter in a restaurant. If you have ever had to wait in a queue to get in to a popular restaurant, then you may now understand why your jobs sometimes do not start instantly, as they do on your laptop.

The scheduler used in this lesson is {{ site.sched.name }}. Although {{ site.sched.name }} is not used everywhere, running jobs is quite similar regardless of what software is being used. The exact syntax might change, but the concepts remain the same.

Running a Batch Job

The most basic use of the scheduler is to run a command non-interactively. Any command (or series of commands) that you want to run on the cluster is called a job, and the process of using a scheduler to run the job is called batch job submission.

In this case, the job we want to run is a shell script – essentially a text file containing a list of UNIX commands to be executed in a sequential manner. Our shell script will have three parts:

- On the very first line, add {{ site.remote.bash_shebang }}. The #! (pronounced “hash-bang” or “shebang”) tells the computer what program is meant to process the contents of this file. In this case, we are telling it that the commands that follow are written for the command-line shell (what we’ve been doing everything in so far).

- Anywhere below the first line, we’ll add an echo command with a friendly greeting. When run, the shell script will print whatever comes after echo in the terminal.

  - echo -n will print everything that follows, without ending the line by printing the new-line character.

- On the last line, we’ll invoke the hostname command, which will print the name of the machine the script is run on.

OUTPUT

{{ site.remote.bash_shebang }}

echo -n "This script is running on "

hostname

Creating Our Test Job

Run the script. Does it execute on the cluster or just our login node?

This script ran on the login node, but we want to take advantage of the compute nodes: we need the scheduler to queue up example-job.sh to run on a compute node.
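For reference, here is a sketch of creating the script and running it directly, without the scheduler. It assumes #!/bin/bash as the shebang and writes example-job.sh into the current directory.

```shell
# Write the three-part script described above to example-job.sh.
cat > example-job.sh << 'EOF'
#!/bin/bash
echo -n "This script is running on "
hostname
EOF

# Running it with bash executes it on the machine we are logged in to,
# which on a cluster is the login node, not a compute node.
bash example-job.sh
```

The name printed at the end of the line is the machine that ran the script, which is how we can tell where it executed.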

To submit this task to the scheduler, we use the {{ site.sched.submit.name }} command. This creates a job which will run the script when dispatched to a compute node which the queuing system has identified as being available to perform the work.

BASH

{{ site.remote.prompt }} {{ site.sched.submit.name }} {% if site.sched.submit.options != '' %}{{ site.sched.submit.options }} {% endif %}example-job.sh

OUTPUT

Submitted batch job 9

And that’s all we need to do to submit a job. Our work is done – now the scheduler takes over and tries to run the job for us. While the job is waiting to run, it goes into a list of jobs called the queue. To check on our job’s status, we check the queue using the command {{ site.sched.status }} {{ site.sched.flag.user }}.

OUTPUT

JOBID PARTITION NAME USER ST TIME NODES NODELIST(REASON)

9 cpubase_b example- user01 R 0:05 1 node1

We can see all the details of our job, most importantly that it is in the R or RUNNING state. Sometimes our jobs might need to wait in a queue (PENDING) or have an error (E).
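The submit-then-check pattern can be sketched as follows, assuming the scheduler is Slurm (the scheduler named earlier in this lesson) with its sbatch and squeue commands. The helper job_id_from_message is a hypothetical name introduced here. The cluster-only part is guarded so the snippet does nothing harmful on a machine without Slurm.

```shell
# Pull the numeric job ID out of the "Submitted batch job N" confirmation.
job_id_from_message() {
  awk '/^Submitted batch job/ { print $4 }'
}

# Demonstration on the confirmation message shown above; prints 9.
echo "Submitted batch job 9" | job_id_from_message

# On a cluster with Slurm: submit, remember the ID, and query only that job.
if command -v sbatch >/dev/null 2>&1; then
  job_id="$(sbatch example-job.sh | job_id_from_message)"
  squeue -j "$job_id"
fi
```

Keeping the job ID in a variable is handy later on, for example when a job needs to be cancelled.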

Where’s the Output?

On the login node, this script printed output to the terminal – but now, when {{ site.sched.status }} shows the job has finished, nothing was printed to the terminal.

Cluster job output is typically redirected to a file in the directory you launched it from. Use ls to find and cat to read the file.
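As a sketch of that search, assuming Slurm and its default output file name slurm-<jobid>.out (sites can configure a different name), the hypothetical helper show_job_logs below prints every such file in a directory.

```shell
# Print each Slurm output file found in a directory (default: current one).
show_job_logs() {
  local dir="${1:-.}"
  local log
  for log in "$dir"/slurm-*.out; do
    [ -e "$log" ] || continue      # nothing matched: skip the literal pattern
    echo "== ${log##*/} =="
    cat "$log"
  done
}

# Look in the directory the job was submitted from.
show_job_logs
```

If nothing is printed, the job may still be running, or the output may have been directed elsewhere by your site’s configuration.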

Customising a Job

The job we just ran used all of the scheduler’s default options. In a real-world scenario, that’s probably not what we want. The default options represent a reasonable minimum. Chances are, we will need more cores, more memory, more time, among other special considerations. To get access to these resources we must customize our job script.

Comments in UNIX shell scripts (denoted by #) are typically ignored, but there are exceptions. For instance the special #! comment at the beginning of scripts specifies what program should be used to run it (you’ll typically see {{ site.local.bash_shebang }}). Schedulers like {{ site.sched.name }} also have a special comment used to denote special scheduler-specific options. Though these comments differ from scheduler to scheduler, {{ site.sched.name }}’s special comment is {{ site.sched.comment }}. Anything following the {{ site.sched.comment }} comment is interpreted as an instruction to the scheduler.

Let’s illustrate this by example. By default, a job’s name is the name of the script, but the {{ site.sched.flag.name }} option can be used to change the name of a job. Add an option to the script:

OUTPUT

{{ site.remote.bash_shebang }}

{{ site.sched.comment }} {{ site.sched.flag.name }} hello-world

echo -n "This script is running on "

hostname

Submit the job and monitor its status:

BASH

{{ site.remote.prompt }} {{ site.sched.submit.name }} {% if site.sched.submit.options != '' %}{{ site.sched.submit.options }} {% endif %}example-job.sh

{{ site.remote.prompt }} {{ site.sched.status }} {{ site.sched.flag.user }}

OUTPUT

JOBID PARTITION NAME USER ST TIME NODES NODELIST(REASON)

10 cpubase_b hello-wo user01 R 0:02 1 node1

Fantastic, we’ve successfully changed the name of our job!

Resource Requests

What about more important changes, such as the number of cores and memory for our jobs? One thing that is absolutely critical when working on an HPC system is specifying the resources required to run a job. This allows the scheduler to find the right time and place to schedule our job. If you do not specify requirements (such as the amount of time you need), you will likely be stuck with your site’s default resources, which is probably not what you want.

The following are several key resource requests:

- --ntasks=<ntasks> or -n <ntasks>: How many CPU cores does your job need, in total?

- --time <days-hours:minutes:seconds> or -t <days-hours:minutes:seconds>: How much real-world time (walltime) will your job take to run? The <days> part can be omitted.

- --mem=<megabytes>: How much memory on a node does your job need in megabytes? You can also specify gigabytes by adding a little “g” afterwards (example: --mem=5g).

- --nodes=<nnodes> or -N <nnodes>: How many separate machines does your job need to run on? Note that if you set ntasks to a number greater than what one machine can offer, {{ site.sched.name }} will set this value automatically.

Note that just requesting these resources does not make your job run faster, nor does it necessarily mean that you will consume all of these resources. It only means that these are made available to you. Your job may end up using less memory, or less time, or fewer nodes than you have requested, and it will still run.

It’s best if your requests accurately reflect your job’s requirements. We’ll talk more about how to make sure that you’re using resources effectively in a later episode of this lesson.
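To make the options above concrete, here is a sketch of a job script that combines several of them. It assumes Slurm and its #SBATCH comment prefix; the file name resource-job.sh, the job name, and the requested amounts are illustrative values, not recommendations.

```shell
# Write a job script whose #SBATCH lines carry the resource requests.
cat > resource-job.sh << 'EOF'
#!/bin/bash
#SBATCH --job-name=resource-demo
#SBATCH --ntasks=1
#SBATCH --time=00:05:00
#SBATCH --mem=500
echo -n "This script is running on "
hostname
EOF

# Show only the scheduler directives the script contains.
grep '^#SBATCH' resource-job.sh
```

Because the directives are ordinary shell comments, the script still runs unchanged with bash resource-job.sh; only the scheduler reads them.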

Submitting Resource Requests

Modify our hostname script so that it runs for a minute, then submit a job for it on the cluster.

OUTPUT

{{ site.remote.bash_shebang }}

{{ site.sched.comment }} {{ site.sched.flag.time }} 00:01 # timeout in HH:MM

echo -n "This script is running on "

sleep 20 # time in seconds

hostname

BASH

{{ site.remote.prompt }} {{ site.sched.submit.name }} {% if site.sched.submit.options != '' %}{{ site.sched.submit.options }} {% endif %}example-job.sh

Why are the {{ site.sched.name }} runtime and sleep time not identical?

Resource requests are typically binding. If you exceed them, your job will be killed. Let’s use wall time as an example. We will request 1 minute of wall time, and attempt to run a job for four minutes.

OUTPUT

{{ site.remote.bash_shebang }}

{{ site.sched.comment }} {{ site.sched.flag.name }} long_job

{{ site.sched.comment }} {{ site.sched.flag.time }} 00:01 # timeout in HH:MM

echo "This script is running on ... "

sleep 240 # time in seconds

hostname

Submit the job and wait for it to finish. Once it has finished, check the log file.

BASH

{{ site.remote.prompt }} {{ site.sched.submit.name }} {% if site.sched.submit.options != '' %}{{ site.sched.submit.options }} {% endif %}example-job.sh

{{ site.remote.prompt }} {{ site.sched.status }} {{ site.sched.flag.user }}

OUTPUT

This script is running on ...

slurmstepd: error: *** JOB 12 ON node1 CANCELLED AT 2021-02-19T13:55:57 DUE TO TIME LIMIT ***

Our job was killed for exceeding the amount of resources it requested. Although this appears harsh, this is actually a feature. Strict adherence to resource requests allows the scheduler to find the best possible place for your jobs. Even more importantly, it ensures that another user cannot use more resources than they’ve been given. If another user messes up and accidentally attempts to use all of the cores or memory on a node, {{ site.sched.name }} will either restrain their job to the requested resources or kill the job outright. Other jobs on the node will be unaffected. This means that one user cannot mess up the experience of others: the only jobs affected by a mistake in scheduling will be their own.
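The effect of a binding time limit can be tried without a cluster. The sketch below is a local analogy only, not how the scheduler enforces limits: it uses the GNU coreutils timeout command (assumed to be installed) to stop a three-second sleep after one second.

```shell
# Local analogy: allow the command 1 second, although it wants to run for 3.
status=0
timeout 1 sleep 3 || status=$?

# timeout reports status 124 when it had to stop the command.
echo "exit status: ${status}"
```

Just as with the job above, the command is stopped partway through and anything after the limit never runs.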

Cancelling a Job

Sometimes we’ll make a mistake and need to cancel a job. This can be done with the {{ site.sched.del }} command. Let’s submit a job and then cancel it using its job number (remember to change the walltime so that it runs long enough for you to cancel it before it is killed!).

BASH

{{ site.remote.prompt }} {{ site.sched.submit.name }} {% if site.sched.submit.options != '' %}{{ site.sched.submit.options }} {% endif %}example-job.sh

{{ site.remote.prompt }} {{ site.sched.status }} {{ site.sched.flag.user }}

OUTPUT

Submitted batch job 13

JOBID PARTITION NAME USER ST TIME NODES NODELIST(REASON)

13 cpubase_b long_job user01 R 0:02 1 node1

Now cancel the job with its job number (printed in your terminal). A clean return of your command prompt indicates that the request to cancel the job was successful.

BASH

{{ site.remote.prompt }} {{ site.sched.del }} 13

# It might take a minute for the job to disappear from the queue...

{{ site.remote.prompt }} {{ site.sched.status }} {{ site.sched.flag.user }}

OUTPUT

JOBID PARTITION NAME USER ST TIME NODES NODELIST(REASON)

Cancelling multiple jobs

We can also cancel all of our jobs at once using the -u option. This will delete all jobs for a specific user (in this case, yourself). Note that you can only delete your own jobs.

Try submitting multiple jobs and then cancelling them all.

First, submit a trio of jobs:

BASH

{{ site.remote.prompt }} {{ site.sched.submit.name }} {% if site.sched.submit.options != '' %}{{ site.sched.submit.options }} {% endif %}example-job.sh

{{ site.remote.prompt }} {{ site.sched.submit.name }} {% if site.sched.submit.options != '' %}{{ site.sched.submit.options }} {% endif %}example-job.sh

{{ site.remote.prompt }} {{ site.sched.submit.name }} {% if site.sched.submit.options != '' %}{{ site.sched.submit.options }} {% endif %}example-job.sh

Then, cancel them all:

Other Types of Jobs

Up to this point, we’ve focused on running jobs in batch mode. {{ site.sched.name }} also provides the ability to start an interactive session.

Very frequently, there are tasks that need to be done interactively. Creating an entire job script might be overkill, but the amount of resources required is too much for a login node to handle. A good example of this might be building a genome index for alignment with a tool like HISAT2.

Fortunately, we can run these types of tasks as a one-off with {{ site.sched.interactive }}.

{{ site.sched.interactive }} runs a single command on the cluster and then exits. Let’s demonstrate this by running the hostname command with {{ site.sched.interactive }}. (We can cancel an {{ site.sched.interactive }} job with Ctrl-c.)

OUTPUT

{{ site.remote.node }}

{{ site.sched.interactive }} accepts all of the same options as {{ site.sched.submit.name }}. However, instead of specifying these in a script, these options are specified on the command-line when starting a job. To submit a job that uses 2 CPUs for instance, we could use the following command:

OUTPUT

This job will use 2 CPUs.

This job will use 2 CPUs.

Typically, the resulting shell environment will be the same as that for {{ site.sched.submit.name }}.

Interactive jobs

Sometimes, you will need a lot of resources for interactive use. Perhaps it’s our first time running an analysis or we are attempting to debug something that went wrong with a previous job. Fortunately, {{ site.sched.name }} makes it easy to start an interactive job with {{ site.sched.interactive }}:

You should be presented with a bash prompt. Note that the prompt will likely change to reflect your new location, in this case the compute node we are logged on to. You can also verify this with hostname.

Creating remote graphics

To see graphical output inside your jobs, you need to use X11 forwarding. To connect with this feature enabled, use the -Y option when you log in with the ssh command, e.g., ssh -Y {{ site.remote.user }}@{{ site.remote.login }}.

To demonstrate what happens when you create a graphics window on the remote node, use the xeyes command. A relatively adorable pair of eyes should pop up (press Ctrl-C to stop). If you are using a Mac, you must have installed XQuartz (and restarted your computer) for this to work.

If your cluster has the slurm-spank-x11 plugin installed, you can ensure X11 forwarding within interactive jobs by using the --x11 option for {{ site.sched.interactive }} with the command {{ site.sched.interactive }} --x11 --pty bash.

When you are done with the interactive job, type exit to quit your session.

- The scheduler handles how compute resources are shared between users.

- A job is just a shell script.

- Request slightly more resources than you will need.

Content from HPCC version - Scheduler Fundamentals

Last updated on 2026-02-17

Overview

Questions

- What is a scheduler and why does a cluster need one?

- How do I launch a program to run on a compute node in the cluster?

- How do I capture the output of a program that is run on a node in the cluster?

Objectives

- Submit a simple script to the cluster.

- Monitor the execution of jobs using command line tools.

- Inspect the output and error files of your jobs.

- Find the right place to put large datasets on the cluster.

Job Scheduler

An HPC system might have thousands of nodes and thousands of users. How do we decide who gets what and when? How do we ensure that a task is run with the resources it needs? This job is handled by a special piece of software called the scheduler. On an HPC system, the scheduler manages which jobs run where and when.

The following illustration compares these tasks of a job scheduler to a waiter in a restaurant. If you have ever had to wait in a queue to get in to a popular restaurant, then you may now understand why your jobs sometimes do not start instantly, as they do on your laptop.

The scheduler used in this lesson is Slurm. Although Slurm is not used everywhere, running jobs is quite similar regardless of what software is being used. The exact syntax might change, but the concepts remain the same.

Running a Batch Job

The most basic use of the scheduler is to run a command non-interactively. Any command (or series of commands) that you want to run on the cluster is called a job, and the process of using a scheduler to run the job is called batch job submission.

In this case, the job we want to run is a shell script – essentially a text file containing a list of UNIX commands to be executed in a sequential manner. Our shell script will have three parts:

- On the very first line, add #!/bin/bash. The #! (pronounced “hash-bang” or “shebang”) tells the computer what program is meant to process the contents of this file. In this case, we are telling it that the commands that follow are written for the command-line shell (what we’ve been doing everything in so far).

- Anywhere below the first line, we’ll add an echo command with a friendly greeting. When run, the shell script will print whatever comes after echo in the terminal.

  - echo -n will print everything that follows, without ending the line by printing the new-line character.

- On the last line, we’ll invoke the hostname command, which will print the name of the machine the script is run on.

OUTPUT

#!/bin/bash

echo -n "This script is running on "

hostname

Creating Our Test Job

Run the script. Does it execute on the cluster or just our login node?

This script ran on the login node, but we want to take advantage of the compute nodes: we need the scheduler to queue up example-job.sh to run on a compute node.

To submit this task to the scheduler, we use the sbatch command. This creates a job which will run the script when dispatched to a compute node which the queuing system has identified as being available to perform the work.

BASH

[yourUsername@login1 ~]$ sbatch example-job.sh

OUTPUT

Submitted batch job 9

And that’s all we need to do to submit a job. Our work is done – now the scheduler takes over and tries to run the job for us. While the job is waiting to run, it goes into a list of jobs called the queue. To check on our job’s status, we check the queue using the command squeue -u yourUsername.

OUTPUT

JOBID PARTITION NAME USER ST TIME NODES NODELIST(REASON)

9 cpubase_b example- user01 R 0:05 1 node1

We can see all the details of our job, most importantly that it is in the R or RUNNING state. Sometimes our jobs might need to wait in a queue (PENDING) or have an error (E).

Where’s the Output?

On the login node, this script printed output to the terminal – but now, when squeue shows the job has finished, nothing was printed to the terminal.

Cluster job output is typically redirected to a file in the directory you launched it from. Use ls to find and cat to read the file.

Customising a Job

The job we just ran used all of the scheduler’s default options. In a real-world scenario, that’s probably not what we want. The default options represent a reasonable minimum. Chances are, we will need more cores, more memory, more time, among other special considerations. To get access to these resources we must customize our job script.

Comments in UNIX shell scripts (denoted by #) are typically ignored, but there are exceptions. For instance the special #! comment at the beginning of scripts specifies what program should be used to run it (you’ll typically see #!/usr/bin/env bash). Schedulers like Slurm also have a special comment used to denote special scheduler-specific options. Though these comments differ from scheduler to scheduler, Slurm’s special comment is #SBATCH. Anything following the #SBATCH comment is interpreted as an instruction to the scheduler.

Let’s illustrate this by example. By default, a job’s name is the name of the script, but the -J option can be used to change the name of a job. Add an option to the script:

OUTPUT

#!/bin/bash

#SBATCH -J hello-world

echo -n "This script is running on "

hostname

Submit the job and monitor its status:

BASH

[yourUsername@login1 ~]$ sbatch example-job.sh

[yourUsername@login1 ~]$ squeue -u yourUsername

OUTPUT

JOBID PARTITION NAME USER ST TIME NODES NODELIST(REASON)

10 cpubase_b hello-wo user01 R 0:02 1 node1

Fantastic, we’ve successfully changed the name of our job!

Resource Requests

What about more important changes, such as the number of cores and memory for our jobs? One thing that is absolutely critical when working on an HPC system is specifying the resources required to run a job. This allows the scheduler to find the right time and place to schedule our job. If you do not specify requirements (such as the amount of time you need), you will likely be stuck with your site’s default resources, which is probably not what you want.

The following are several key resource requests:

- --ntasks=<ntasks> or -n <ntasks>: How many CPU cores does your job need, in total?

- --time <days-hours:minutes:seconds> or -t <days-hours:minutes:seconds>: How much real-world time (walltime) will your job take to run? The <days> part can be omitted.

- --mem=<megabytes>: How much memory on a node does your job need in megabytes? You can also specify gigabytes by adding a little “g” afterwards (example: --mem=5g).

- --nodes=<nnodes> or -N <nnodes>: How many separate machines does your job need to run on? Note that if you set ntasks to a number greater than what one machine can offer, Slurm will set this value automatically.

Note that just requesting these resources does not make your job run faster, nor does it necessarily mean that you will consume all of these resources. It only means that these are made available to you. Your job may end up using less memory, or less time, or fewer nodes than you have requested, and it will still run.

It’s best if your requests accurately reflect your job’s requirements. We’ll talk more about how to make sure that you’re using resources effectively in a later episode of this lesson.

Submitting Resource Requests

Modify our hostname script so that it runs for a minute, then submit a job for it on the cluster.

OUTPUT

#!/bin/bash

#SBATCH -t 00:01:00 # time limit in HH:MM:SS

echo -n "This script is running on "

sleep 20 # time in seconds

hostname

Why are the Slurm runtime and sleep time not identical?

Resource requests are typically binding. If you exceed them, your job will be killed. Let’s use wall time as an example. We will request 1 minute of wall time, and attempt to run a job for four minutes.

OUTPUT

#!/bin/bash

#SBATCH -J long_job

#SBATCH -t 00:01:00 # time limit in HH:MM:SS

echo "This script is running on ... "

sleep 240 # time in seconds

hostname

Submit the job and wait for it to finish. Once it has finished, check the log file.

BASH

[yourUsername@login1 ~]$ sbatch example-job.sh

[yourUsername@login1 ~]$ squeue -u yourUsername

OUTPUT

This script is running on ...

slurmstepd: error: *** JOB 12 ON node1 CANCELLED AT 2021-02-19T13:55:57 DUE TO TIME LIMIT ***

Our job was killed for exceeding the amount of resources it requested. Although this appears harsh, it is actually a feature. Strict adherence to resource requests allows the scheduler to find the best possible place for your jobs. Even more importantly, it ensures that another user cannot use more resources than they’ve been given. If another user messes up and accidentally attempts to use all of the cores or memory on a node, Slurm will either restrain their job to the requested resources or kill the job outright. Other jobs on the node will be unaffected. This means that one user cannot mess up the experience of others: the only jobs affected by a mistake in scheduling will be their own.
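One way to avoid hitting the limit is to time a short test run and pad the measured runtime before writing it into the -t request. The sketch below assumes a 20% safety margin, which is an illustrative choice rather than a Slurm rule; suggest_walltime is a hypothetical helper name.

```shell
# Suggest a -t value: measured runtime in seconds plus a safety margin,
# rounded up to whole minutes and printed as HH:MM:SS.
# The default 20% margin is an assumption for illustration.
suggest_walltime() {
    local runtime_s=$1
    local margin_pct=${2:-20}
    local padded_s=$(( runtime_s * (100 + margin_pct) / 100 ))
    local minutes=$(( (padded_s + 59) / 60 ))
    printf '%02d:%02d:00\n' $(( minutes / 60 )) $(( minutes % 60 ))
}

suggest_walltime 240   # a 240-second run; prints 00:05:00
```

With this request, the 240-second job from the example would have finished inside its limit instead of being cancelled.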

Cancelling a Job

Sometimes we’ll make a mistake and need to cancel a job. This can be done with the scancel command. Let’s submit a job and then cancel it using its job number (remember to change the walltime so that it runs long enough for you to cancel it before it is killed!).

BASH

[yourUsername@login1 ~]$ sbatch example-job.sh

[yourUsername@login1 ~]$ squeue -u yourUsername

OUTPUT

Submitted batch job 13

JOBID PARTITION NAME USER ST TIME NODES NODELIST(REASON)

13 cpubase_b long_job user01 R 0:02 1 node1

Now cancel the job with its job number (printed in your terminal). A clean return of your command prompt indicates that the request to cancel the job was successful.

BASH

[yourUsername@login1 ~]$ scancel 13

# It might take a minute for the job to disappear from the queue...

[yourUsername@login1 ~]$ squeue -u yourUsername

OUTPUT

JOBID PARTITION NAME USER ST TIME NODES NODELIST(REASON)

Cancelling multiple jobs

We can also cancel all of our jobs at once using the -u option. This will delete all jobs for a specific user (in this case, yourself). Note that you can only delete your own jobs.

Try submitting multiple jobs and then cancelling them all.

First, submit a trio of jobs:

BASH

[yourUsername@login1 ~]$ sbatch example-job.sh

[yourUsername@login1 ~]$ sbatch example-job.sh

[yourUsername@login1 ~]$ sbatch example-job.sh

Then, cancel them all:
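The cancel step is not shown here. A minimal sketch of it, using the -u option described above, could look like the following. cancel_all_jobs is a hypothetical helper name, and the check for scancel only keeps the snippet harmless on machines without Slurm.

```shell
# Cancel every job belonging to one user (default: whoever runs this).
cancel_all_jobs() {
    local user=${1:-$(id -un)}
    if command -v scancel >/dev/null 2>&1; then
        scancel -u "$user"
        # The queue listing should now be empty, possibly after a short delay.
        squeue -u "$user"
    else
        echo "scancel not found: run this on a cluster login node"
    fi
}

cancel_all_jobs
```

Typed interactively, this is simply scancel -u yourUsername followed by squeue -u yourUsername.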

Other Types of Jobs

Up to this point, we’ve focused on running jobs in batch mode. Slurm also provides the ability to start an interactive session.

Very frequently, there are tasks that need to be done interactively. Creating an entire job script might be overkill, but the amount of resources required is too much for a login node to handle. A good example of this might be building a genome index for alignment with a tool like HISAT2.

Fortunately, we can run these types of tasks as a one-off with srun.

srun runs a single command on the cluster and then exits. Let’s demonstrate this by running the hostname command with srun. (We can cancel an srun job with Ctrl-c.)
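A minimal sketch of that demonstration follows. run_on_compute_node is a hypothetical wrapper name; on a cluster, the whole thing reduces to typing srun hostname.

```shell
# Run one command on a compute node; srun returns when the command finishes.
run_on_compute_node() {
    if command -v srun >/dev/null 2>&1; then
        srun "$@"
    else
        echo "srun not found: run this on a cluster login node"
    fi
}

run_on_compute_node hostname
```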

OUTPUT

smnode1

srun accepts all of the same options as sbatch. However, instead of specifying these in a script, these options are specified on the command-line when starting a job. To submit a job that uses 2 CPUs for instance, we could use the following command:
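A sketch of such a command, assuming the -n (--ntasks) option is what requests the 2 CPUs; two_cpu_demo is a hypothetical wrapper name:

```shell
# Ask for 2 tasks on the command line instead of via #SBATCH lines.
# srun starts the command once per task, so the message appears twice.
two_cpu_demo() {
    if command -v srun >/dev/null 2>&1; then
        srun -n 2 echo "This job will use 2 CPUs."
    else
        echo "srun not found: run this on a cluster login node"
    fi
}

two_cpu_demo
```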

OUTPUT

This job will use 2 CPUs.

This job will use 2 CPUs.

Typically, the resulting shell environment will be the same as that for sbatch.

Interactive jobs

Sometimes, you will need a lot of resources for interactive use. Perhaps it’s your first time running an analysis or you are attempting to debug something that went wrong with a previous job. Fortunately, Slurm makes it easy to start an interactive job with srun:
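A minimal sketch, assuming the same --pty bash form that appears with the --x11 option further down; start_interactive_shell is a hypothetical wrapper name:

```shell
# Start a shell on a compute node; --pty attaches it to our terminal.
start_interactive_shell() {
    if command -v srun >/dev/null 2>&1; then
        srun --pty bash
    else
        echo "srun not found: run this on a cluster login node"
    fi
}

start_interactive_shell
```

Resource options such as -n or -t can be added before --pty in the same way as for any other srun command.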

You should be presented with a bash prompt. Note that the prompt will likely change to reflect your new location, in this case the compute node you are logged on to. You can also verify this with hostname.

Creating remote graphics

To see graphical output inside your jobs, you need to use X11 forwarding. To connect with this feature enabled, use the -Y option when you log in with the ssh command, e.g., ssh -Y yourUsername@cluster.hpc-carpentry.org.

To demonstrate what happens when you create a graphics window on the remote node, use the xeyes command. A relatively adorable pair of eyes should pop up (press Ctrl-C to stop). If you are using a Mac, you must have installed XQuartz (and restarted your computer) for this to work.

If your cluster has the slurm-spank-x11 plugin installed, you can ensure X11 forwarding within interactive jobs by using the --x11 option for srun with the command srun --x11 --pty bash.

When you are done with the interactive job, type exit to quit your session.

- The scheduler handles how compute resources are shared between users.

- A job is just a shell script.

- Request slightly more resources than you will need.

Content from EPCC version - Working with the scheduler

Last updated on 2026-02-17

Overview

Questions

- What is a scheduler and why are they used?

- How do I launch a program to run on any one node in the cluster?

- How do I capture the output of a program that is run on a node in the cluster?

Objectives

- Run a simple Hello World style program on the cluster.

- Submit a simple Hello World style script to the cluster.

- Use the batch system command line tools to monitor the execution of your job.

- Inspect the output and error files of your jobs.

Job Scheduler

An HPC system might have thousands of nodes and thousands of users. How do we decide who gets what and when? How do we ensure that a task is run with the resources it needs? This job is handled by a special piece of software called the scheduler. On an HPC system, the scheduler manages which jobs run where and when.

The following illustration compares these tasks of a job scheduler to a waiter in a restaurant. If you have ever had to wait in a queue to get into a popular restaurant, you may understand why your jobs sometimes do not start instantly, as they would on your laptop.

The scheduler used in this lesson is Slurm. Although Slurm is not used everywhere, running jobs is quite similar regardless of what software is being used. The exact syntax might change, but the concepts remain the same.

Running a Batch Job

The most basic use of the scheduler is to run a command non-interactively. Any command (or series of commands) that you want to run on the cluster is called a job, and the process of using a scheduler to run the job is called batch job submission.

In this case, the job we want to run is just a shell script. Let’s create a demo shell script to run as a test. The landing pad will have a number of terminal-based text editors installed. Use whichever you prefer. Unsure? nano is a pretty good, basic choice.

BASH

userid@ln03:~> nano example-job.sh

userid@ln03:~> chmod +x example-job.sh

userid@ln03:~> cat example-job.sh

OUTPUT

#!/bin/bash

echo -n "This script is running on "

hostname

OUTPUT

This script is running on ln03

This job runs on the login node.

If you completed the previous challenge successfully, you probably realise that there is a distinction between running the job through the scheduler and just “running it”. To submit this job to the scheduler, we use the sbatch command.
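The submission command itself is not shown here. A plausible form, borrowing the --partition and --qos values that this episode uses when resubmitting, is sketched below. Those values are site-specific assumptions, and submit_job is a hypothetical wrapper name.

```shell
# Submit a job script to the scheduler from the current directory.
submit_job() {
    local script=$1
    if command -v sbatch >/dev/null 2>&1; then
        # Partition and QoS are site-specific; adjust for your own cluster.
        sbatch --partition=standard --qos=short "$script"
    else
        echo "sbatch not found: run this on a cluster login node"
    fi
}

submit_job example-job.sh
```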

OUTPUT

sbatch: Warning: Your job has no time specification (--time=) and the default time is short. You can cancel your job with 'scancel <JOB_ID>' if you wish to resubmit.

sbatch: Warning: It appears your working directory may be on the home filesystem. It is /home2/home/ta114/ta114/userid. This is not available from the compute nodes - please check that this is what you intended. You can cancel your job with 'scancel <JOBID>' if you wish to resubmit.

Submitted batch job 286949

Ah! What went wrong here? Slurm is telling us that the file system we are currently on, /home, is not available on the compute nodes and that we are getting the default, short runtime. We will deal with the runtime later, but we need to move to a different file system to submit the job and have it visible to the compute nodes. On ARCHER2, this is the /work file system. The path is similar to home but with /work at the start. Let’s move there now, copy our job script across and resubmit:

BASH

userid@ln03:~> cd /work/ta114/ta114/userid

userid@uan01:/work/ta114/ta114/userid> cp ~/example-job.sh .

userid@uan01:/work/ta114/ta114/userid> sbatch --partition=standard --qos=short example-job.sh

OUTPUT

Submitted batch job 36855

That’s better! And that’s all we need to do to submit a job. Our work is done; now the scheduler takes over and tries to run the job for us. While the job is waiting to run, it goes into a list of jobs called the queue. To check on our job’s status, we check the queue using the command squeue -u userid.

OUTPUT

JOBID USER ACCOUNT NAME ST REASON START_TIME T...

36856 yourUsername yourAccount example-job.sh R None 2017-07-01T16:47:02 ...

We can see all the details of our job, most importantly that it is in the R or RUNNING state. Sometimes our jobs might need to wait in a queue (PD or PENDING) or may fail (F or FAILED).

The best way to check our job’s status is with squeue. Of course, running squeue repeatedly to check on things can be a little tiresome. To see a real-time view of our jobs, we can use the watch command. By default, watch reruns a given command at 2-second intervals. This is too frequent, and will likely upset your system administrator. You can change the interval to a more reasonable value, for example 15 seconds, with the -n 15 parameter. Let’s try using it to monitor another job.

BASH

userid@uan01:/work/ta114/ta114/userid> sbatch --partition=standard --qos=short example-job.sh

userid@uan01:/work/ta114/ta114/userid> watch -n 15 squeue -u userid

You should see an auto-updating display of your job’s status. When it finishes, it will disappear from the queue. Press Ctrl-c when you want to stop the watch command.

Where’s the Output?

On the login node, this script printed output to the terminal, but when we exit watch, there’s nothing. Where’d it go? HPC job output is typically redirected to a file in the directory you launched it from. Use ls to find and read the file.
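As a sketch of how to find that file: by default Slurm writes a job's output to slurm-<jobid>.out in the submission directory, unless the site or the job script overrides the name. show_job_output is a hypothetical helper name, and 36855 stands in for whatever job number sbatch printed.

```shell
# Print the log of one job, assuming Slurm's default log name
# slurm-<jobid>.out and that we are in the submission directory.
show_job_output() {
    local job_id=$1
    local log="slurm-${job_id}.out"
    if [ -f "$log" ]; then
        cat "$log"
    else
        echo "No ${log} here; logs in this directory:"
        ls slurm-*.out 2>/dev/null || echo "(none)"
    fi
}

show_job_output 36855
```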

Customising a Job

The job we just ran used some of the scheduler’s default options. In a real-world scenario, that’s probably not what we want. The default options represent a reasonable minimum. Chances are, we will need more cores, more memory, more time, among other special considerations. To get access to these resources we must customize our job script.

Comments in UNIX shell scripts (denoted by #) are typically ignored, but there are exceptions. For instance the special #! comment at the beginning of scripts specifies what program should be used to run it (you’ll typically see #!/bin/bash). Schedulers like Slurm also have a special comment used to denote special scheduler-specific options. Though these comments differ from scheduler to scheduler, Slurm’s special comment is #SBATCH. Anything following the #SBATCH comment is interpreted as an instruction to the scheduler.

Let’s illustrate this by example. By default, a job’s name is the name of the script, but the --job-name option can be used to change the name of a job. Add an option to the script:

OUTPUT

#!/bin/bash

#SBATCH --job-name new_name

echo -n "This script is running on "

hostname
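To check which instructions the scheduler will pick up from a script, the #SBATCH lines can be listed with grep. This is a small sketch: list_sbatch_directives is a hypothetical helper name, and the example script is recreated in a temporary file so that the snippet is self-contained.

```shell
# Show the scheduler directives embedded in a job script.
list_sbatch_directives() {
    grep '^#SBATCH' "$1"
}

# Recreate the example script in a temporary file (path chosen by mktemp).
script=$(mktemp)
cat > "$script" <<'EOF'
#!/bin/bash
#SBATCH --job-name new_name
echo -n "This script is running on "
hostname
EOF

list_sbatch_directives "$script"   # prints: #SBATCH --job-name new_name
rm -f "$script"
```

According to the sbatch documentation, directives are only read up to the first non-comment line of the script, so it is safest to keep all #SBATCH lines at the top.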